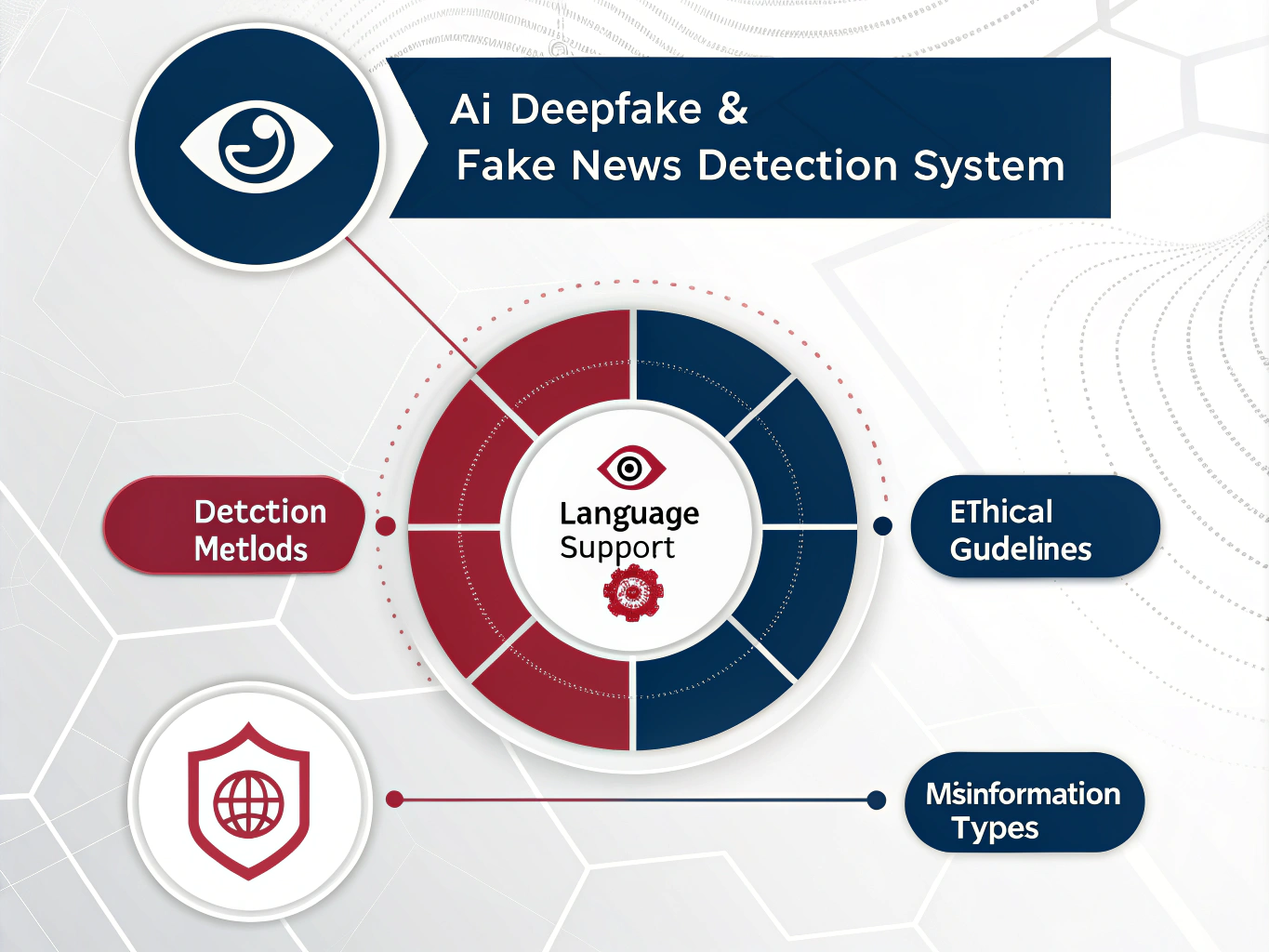

How to Use the AI Deepfake and Fake News Detection System Design Tool Effectively

Step-by-Step Instructions

Use the tool to build a customized AI system design that suits your needs for detecting deepfakes and fake news. Follow these steps:

- Identify the Target Audience: Enter the main users or stakeholders of your AI system. For instance, you can input “Online content moderators and fact-checking platforms” or “Health organizations and public awareness groups”.

- Specify Language Support: List the languages your system should handle, separated by commas. For example, “German, Italian, Dutch” or “Russian, Bengali, Swahili”.

- Define Misinformation Types: Describe the misinformation categories you want the system to focus on. You might enter “Fake celebrity endorsements, manipulated financial reports” or “False medical advice, viral conspiracy narratives”.

- Select Detection Methods: Indicate preferred technical approaches such as “Deep learning-based video analysis, sentiment analysis on social media text” or “Cross-platform metadata verification, audio signal authentication”.

- Set Ethical Guidelines: Outline principles that the AI should follow during operation. Examples include “Ensure data anonymization and user consent” or “Maintain neutrality and avoid censorship”.

- Generate the AI System Design: Click the generate button to receive a detailed plan tailored to your inputs.

After submitting, the tool will analyze your information and provide a comprehensive AI system design. Review the output in the result section and copy it if needed for implementation or further customization.
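To make the inputs above concrete, here is a minimal sketch of how the five form fields could be represented and checked before submission. The tool's real internal format is not documented here, so the `DesignRequest` class, its field names, and the `split_list` helper are hypothetical and only mirror the steps listed above.

```python
from dataclasses import dataclass, field


def split_list(raw: str) -> list[str]:
    """Split a comma-separated field into trimmed, non-empty items."""
    return [item.strip() for item in raw.split(",") if item.strip()]


@dataclass
class DesignRequest:
    # Hypothetical field names, one per form step described above.
    target_audience: str
    languages: list[str]
    misinformation_types: list[str]
    detection_methods: list[str]
    ethical_guidelines: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the request is usable."""
        problems = []
        if not self.target_audience.strip():
            problems.append("target_audience is empty")
        if not self.languages:
            problems.append("at least one language is required")
        if not self.misinformation_types:
            problems.append("at least one misinformation type is required")
        return problems


# Example values are taken from the instructions above.
request = DesignRequest(
    target_audience="Online content moderators and fact-checking platforms",
    languages=split_list("German, Italian, Dutch"),
    misinformation_types=split_list(
        "Fake celebrity endorsements, manipulated financial reports"
    ),
    detection_methods=split_list(
        "Deep learning-based video analysis, sentiment analysis on social media text"
    ),
    ethical_guidelines=["Ensure data anonymization and user consent"],
)
print(request.languages)
print(request.validate())
```

Drafting the inputs in a structured form like this can help you spot an empty or overly broad field before you generate a design.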

What Is the AI Deepfake and Fake News Detection System Design Tool?

Tool Definition and Purpose

This AI system design tool helps you create tailored frameworks to detect and combat misinformation, including deepfakes and fake news, across digital platforms. It generates a detailed system blueprint from the requirements you enter.

The tool assists organizations, media outlets, researchers, and educators in building effective detection systems. By entering details such as your target audience, supported languages, types of misinformation, and ethical requirements, you receive a customized AI design that fits your unique context.

Key Benefits of Using This Tool

- Personalized AI Designs: Tailor your detection system to specialized audiences and content types.

- Multilingual Support: Address misinformation in multiple languages for global impact.

- Ethical AI Implementation: Build transparency, privacy, and fairness into your system.

- Comprehensive Detection Strategies: Combine various AI techniques like natural language processing and image analysis.

- Efficient Development: Save time by automating the initial system design process.

- Adaptability: Modify or extend the generated designs as your needs evolve.

Practical Applications of the AI Deepfake and Fake News Detection System Design Tool

Boosting Digital Trust and Literacy

The tool promotes digital literacy by enabling you to develop AI systems that:

- Help users identify manipulated audiovisual content

- Reduce the circulation of false information on social media

- Support informed decision-making through credible fact verification

- Educate audiences on spotting misinformation tactics

Driving AI Innovation for Misinformation Detection

With this tool, you can:

- Design AI models that combine text, audio, and video analysis

- Incorporate current machine learning algorithms to improve detection accuracy

- Encourage collaboration across technology and social science fields

- Investigate new forms of digitally manipulated content

Enhancing Cybersecurity and Content Integrity

The AI systems designed with this tool help safeguard digital environments by:

- Detecting disinformation campaigns quickly

- Preventing identity fraud with deepfake detection

- Maintaining trust in online communications and media sources

- Supporting law enforcement and digital forensics efforts

Addressing Specific Needs with Custom AI Systems

Targeting Misinformation Challenges

Your AI system can be designed to:

- Identify synthetic videos and audio with high precision

- Detect fraudulent news stories and misleading social media posts

- Monitor misinformation trends across platforms in real time

- Alert users and decision-makers about content that has been verified as false

Creating Solutions for Various Sectors

You can tailor the tool to fit specific applications, such as:

- News Outlets: Verify news sources and authenticate user-generated content

- Government Security: Counteract disinformation affecting public safety

- Social Networks: Automatically flag suspicious or manipulated posts

- Educational Programs: Develop interactive tools to teach media literacy

Embedding Ethical Guidelines

The AI designs integrate ethical principles to:

- Protect user privacy and data security

- Ensure transparency in content flagging and decision-making

- Maintain fairness and mitigate bias in AI outputs

- Respect legal and social norms related to speech and censorship

Common Use Cases for AI Deepfake and Fake News Detection Systems

Political Misinformation Monitoring

Design AI tools to:

- Analyze campaign materials for false claims

- Validate political statements using verified databases

- Detect coordinated misinformation across networks

- Provide real-time fact checks during live events

Corporate Reputation Management

Build AI systems that:

- Scan online channels for fake reviews and manipulated content

- Flag deepfake videos impersonating company executives

- Alert PR teams about emerging misinformation threats

- Support swift response to protect brand image

Advancing Media Literacy Education

Develop AI-driven educational platforms that:

- Provide practical exercises to spot fake news and deepfakes

- Create simulated examples of manipulated media for learning

- Offer individualized feedback to improve critical analysis skills

- Track progress to enhance digital literacy over time

Frequently Asked Questions (FAQ)

1. What misinformation types can this AI system detect?

The system can identify a range of misinformation including deepfake videos and images, fabricated news articles, misleading posts on social media, and tampered audio clips. You choose the specific types via the “Misinformation Types” input.

2. How does the system handle evolving misinformation tactics?

The AI design includes mechanisms for continuous updates, such as retraining with new data, transfer learning, and incorporating the latest research to stay effective against emerging deepfake and fake news techniques.

3. Can the AI system integrate with existing platforms?

Yes. The designs offer integration options with content management systems, social media platforms, and monitoring tools tailored to your target audience and usage scenario.

4. How does the AI support multiple languages?

By specifying languages in the “Language Support” field, the AI system design incorporates multilingual text and media analysis methods, including language-agnostic features, machine translation, or custom models for each language.
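One way a generated design might choose among the three options named above is a simple per-language routing rule. The sketch below is an assumption for illustration only: the function name `choose_pipeline`, the pipeline labels, and the language sets are hypothetical and are not output of the tool.

```python
def choose_pipeline(language: str, custom_models: set[str], translatable: set[str]) -> str:
    """Pick an analysis route for one language.

    Preference order (an assumed policy): a dedicated model if one exists,
    then machine translation into a supported language, then
    language-agnostic features as the fallback.
    """
    lang = language.strip().lower()
    if lang in custom_models:
        return "custom_model"
    if lang in translatable:
        return "translate_then_classify"
    return "language_agnostic"


# Hypothetical coverage: which languages have a dedicated model or translation support.
custom = {"german", "russian"}
translated = {"italian", "dutch", "bengali"}

for name in ["German", "Bengali", "Swahili"]:
    print(name, "->", choose_pipeline(name, custom, translated))
```

The ordering reflects a common trade-off: dedicated models tend to cost the most to build, while language-agnostic features cover the most languages with the least language-specific signal.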

5. Are the AI’s decisions explainable?

Explainability is built into the system with features like confidence scores, detailed content analysis explanations, and visual highlighting of suspicious elements, helping users understand why content is flagged.
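As a rough illustration of how a confidence score and a short explanation could be produced together, the sketch below combines several detector signals with a weighted average and reports the strongest contributors. The signal names, weights, and the 0.5 threshold are invented for the example; a real design would define its own.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    name: str      # what the detector looked at (hypothetical names below)
    score: float   # suspicion score between 0.0 and 1.0
    weight: float  # relative importance of this signal


def explain(signals: list[Signal], threshold: float = 0.5) -> dict:
    """Combine signals into a weighted-average confidence and list the top two contributors."""
    total_weight = sum(s.weight for s in signals)
    if total_weight <= 0:
        raise ValueError("signals must have a positive total weight")
    confidence = sum(s.score * s.weight for s in signals) / total_weight
    ranked = sorted(signals, key=lambda s: s.score * s.weight, reverse=True)
    return {
        "flagged": confidence >= threshold,
        "confidence": round(confidence, 3),
        "top_signals": [s.name for s in ranked[:2]],
    }


report = explain([
    Signal("face_blending_artifacts", score=0.9, weight=2.0),
    Signal("audio_lip_sync_mismatch", score=0.6, weight=1.0),
    Signal("metadata_inconsistency", score=0.2, weight=1.0),
])
print(report)
```

Returning the contributing signals alongside the score gives reviewers something specific to check, which a bare flag does not.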

6. How can users provide feedback on detection results?

The generated design usually includes feedback channels that let users report false positives and false negatives. These reports can then be used to retrain or recalibrate the system so that accuracy improves over time.
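A feedback channel is most useful when reports are tallied into metrics that can be tracked. The sketch below assumes each reviewed item is labeled `true_positive`, `false_positive`, or `false_negative`; these labels and the `summarize_feedback` function are hypothetical, not part of the tool.

```python
from collections import Counter
from typing import Optional


def summarize_feedback(reports: list[str]) -> dict[str, Optional[float]]:
    """Compute precision and recall from labeled feedback reports.

    Returns None for a metric when there is no data to compute it from.
    """
    counts = Counter(reports)
    tp = counts["true_positive"]
    fp = counts["false_positive"]
    fn = counts["false_negative"]
    precision = tp / (tp + fp) if (tp + fp) else None
    recall = tp / (tp + fn) if (tp + fn) else None
    return {"precision": precision, "recall": recall}


# Illustrative data: 8 confirmed flags, 2 wrongly flagged items, 2 missed items.
sample = ["true_positive"] * 8 + ["false_positive"] * 2 + ["false_negative"] * 2
print(summarize_feedback(sample))
```

A drop in precision suggests the system is over-flagging legitimate content, while a drop in recall suggests new manipulation techniques are slipping through.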

7. Can the AI system be customized for different industries?

The tool supports industry-specific tailoring, letting you create AI systems suited for sectors like healthcare, finance, education, or government, addressing unique misinformation challenges within each area.

Important Disclaimer

The calculations, results, and content provided by our tools are not guaranteed to be accurate, complete, or reliable. Users are responsible for verifying and interpreting the results. Our content and tools may contain errors, biases, or inconsistencies. Do not enter personal data, sensitive information, or personally identifiable information in our web forms or tools. Such data entry violates our terms of service and may result in unauthorized disclosure to third parties. We reserve the right to save inputs and outputs from our tools for the purposes of error debugging, bias identification, and performance improvement. External companies providing AI models used in our tools may also save and process data in accordance with their own policies. By using our tools, you consent to this data collection and processing. We reserve the right to limit the usage of our tools based on current usability factors.